
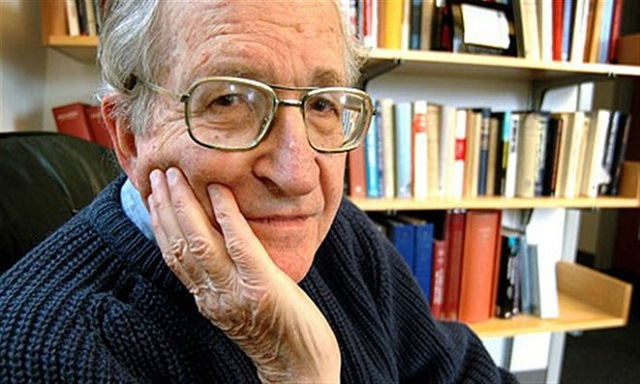

Noam Chomsky (image: ceasefiremagazine.co.uk)

This research out of New York University was, at least to me, something most writers in any field would find interesting, not just for the result but for what it adds to the debate between adherents of a Latinate grammar and those who support a natural grammar.

My feeling is that Latinate grammars are an artificial construct applied to English, which we all should know to be a weird conglomeration of ancient Celtic mixed into a stew of German and French, seasoned with made-up words and a sprinkling of words from many other languages, and which changes faster than the OED can add new words and drop those whose fad has passed. Trying to force what amounts to the foot of a platypus into a rigid Latin shoe strikes me as a very pure form of mental masturbation. A lot of fun while you're doing it, but what have you got when you're done?

So now it's becoming more and more clear that the ultimate grammar of English (and all other languages as well) resides in a small section of our brains that works to sort out sense and meaning without our being aware of the effort. Either a sentence makes sense or it doesn't. The test is not a set of artificial rules but whether our brain can derive sense and meaning from what is said or written.

Here's the report, as always with a link to the full study in the attribution.

* * * * *

Chomsky was right: We do have a 'grammar' in our head

A team of neuroscientists has found new support for MIT linguist Noam Chomsky's decades-old theory that we possess an "internal grammar" that allows us to comprehend even nonsensical phrases. "One of the foundational elements of Chomsky's work is that we have a grammar in our head, which underlies our processing of language," explains David Poeppel, the study's senior researcher and a professor in New York University's Department of Psychology. "Our neurophysiological findings support this theory: we make sense of strings of words because our brains combine words into constituents in a hierarchical manner--a process that reflects an 'internal grammar' mechanism."

The research, which appears in the latest issue of the journal Nature Neuroscience, builds on Chomsky's 1957 work, Syntactic Structures. It posited that we can recognize a phrase such as "Colorless green ideas sleep furiously" as both nonsensical and grammatically correct because we have an abstract knowledge base that allows us to make such distinctions even though the statistical relations between words are non-existent.

Neuroscientists and psychologists predominantly reject this viewpoint, contending that our comprehension does not result from an internal grammar; rather, it is based on both statistical calculations between words and sound cues to structure. That is, we know from experience how sentences should be properly constructed--a reservoir of information we employ upon hearing words and phrases. Many linguists, in contrast, argue that hierarchical structure building is a central feature of language processing.

In an effort to illuminate this debate, the researchers explored whether and how linguistic units are represented in the brain during speech comprehension.

To do so, Poeppel, who is also director of the Max Planck Institute for Empirical Aesthetics in Frankfurt, and his colleagues conducted a series of experiments using magnetoencephalography (MEG), which allows measurements of the tiny magnetic fields generated by brain activity, and electrocorticography (ECoG), a clinical technique used to measure brain activity in patients being monitored for neurosurgery.

The study's subjects listened to sentences in both English and Mandarin Chinese in which the hierarchical structure between words, phrases, and sentences was dissociated from intonational speech cues--the rise and fall of the voice--as well as statistical word cues. The sentences were presented in an isochronous fashion--identical timing between words--and participants listened to predictable sentences (e.g., "New York never sleeps," "Coffee keeps me awake"), grammatically correct but less predictable sentences (e.g., "Pink toys hurt girls"), word lists ("eggs jelly pink awake"), and various other manipulated sequences.

The design allowed the researchers to isolate how the brain concurrently tracks different levels of linguistic abstraction--sequences of words ("furiously green sleep colorless"), phrases ("sleep furiously," "green ideas"), or sentences ("Colorless green ideas sleep furiously")--while removing intonational speech cues and statistical word information, which many say are necessary in building sentences.

Their results showed that the subjects' brains distinctly tracked three components of the phrases they heard, reflecting a hierarchy in our neural processing of linguistic structures: words, phrases, and then sentences--at the same time.
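
For readers who like to see the logic spelled out, here is a toy sketch of why evenly timed words make this kind of tracking visible. It is not the researchers' analysis. It assumes four-word sentences built from two two-word phrases (like the examples above), an invented rate of four words per second, and a made-up "response" that simply ticks at the start of every unit being tracked. The names `onset_train` and `amplitude` are hypothetical helpers written for this illustration.

```python
import cmath

SAMPLE_RATE = 32   # samples per second (assumed, for illustration only)
WORD_PERIOD = 8    # samples between word onsets: 4 words per second (assumed)
N_SAMPLES = 256    # 8 seconds of toy signal


def onset_train(period, n_samples=N_SAMPLES):
    """Return 1.0 at the onset of every unit that repeats each `period` samples."""
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def amplitude(signal, freq_hz, sample_rate=SAMPLE_RATE):
    """Magnitude of the discrete Fourier component of `signal` at `freq_hz`."""
    total = sum(
        x * cmath.exp(-2j * cmath.pi * freq_hz * i / sample_rate)
        for i, x in enumerate(signal)
    )
    return abs(total) / len(signal)


# Onsets of each unit: a word every 8 samples, a two-word phrase every 16,
# a four-word sentence every 32.
words = onset_train(WORD_PERIOD)
phrases = onset_train(2 * WORD_PERIOD)
sentences = onset_train(4 * WORD_PERIOD)

# Toy "responses": a listener who follows only the words (as with a word list)
# versus one who also follows phrases and sentences.
word_list = words
structured = [w + p + s for w, p, s in zip(words, phrases, sentences)]

# Compare the sentence rate (1 Hz), phrase rate (2 Hz), and word rate (4 Hz).
for freq in (1.0, 2.0, 4.0):
    print(f"{freq} Hz  word list: {amplitude(word_list, freq):.3f}  "
          f"sentences: {amplitude(structured, freq):.3f}")
```

In this toy, the word-list signal has a rhythm only at the word rate, while the structured signal also has rhythms at the slower phrase and sentence rates. Because the timing between words is identical and intonation is removed, nothing in the sound itself marks those slower rhythms, so a rhythm at the phrase or sentence rate would have to come from the listener's own grouping of the words.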

"Because we went to great lengths to design experimental conditions that control for statistical or sound cue contributions to processing, our findings show that we must use the grammar in our head," explains Poeppel. "Our brains lock onto every word before working to comprehend phrases and sentences. The dynamics reveal that we undergo a grammar-based construction in the processing of language."

This is a controversial conclusion from the perspective of current research, the researchers note, because the notion of abstract, hierarchical, grammar-based structure building is rather unpopular.
